Modern approach to AI

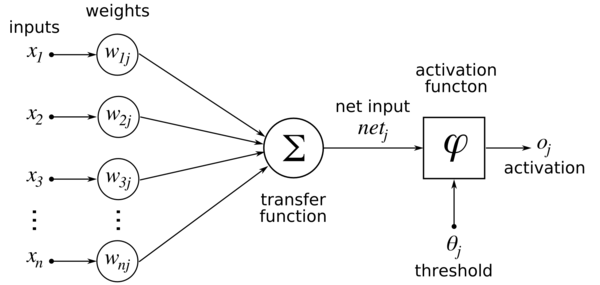

Many years ago I met the artificial neuron. Like this one:

source: WikiBooks

At first I was impressed. But later, while implementing my first artificial neural network, I realized it is a terribly poor model. My network was not able to handle even the simplest recognition tasks. I got the same result with genetic algorithms.
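For readers who have not seen it, the classic artificial neuron is just a weighted sum of the inputs followed by a threshold. Here is a minimal sketch in Python. The function name `neuron` and the weights are hypothetical and hand-picked for illustration; they are chosen so that the neuron behaves like a logical AND.

```python
def neuron(inputs, weights, bias):
    """Weighted sum of the inputs followed by a step activation."""
    total = sum(x * w for x, w in zip(inputs, weights)) + bias
    return 1 if total > 0 else 0


# Hand-picked (hypothetical) weights that make the neuron act like logical AND.
and_weights, and_bias = [1.0, 1.0], -1.5

# Try all four input combinations: (0,0), (0,1), (1,0), (1,1).
outputs = [neuron([a, b], and_weights, and_bias) for a in (0, 1) for b in (0, 1)]
print(outputs)
```

A single unit like this can only separate its inputs with a straight line, which is one reason a small network of them struggles with recognition tasks.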

After that experience I lost all hope in artificial intelligence. I had the feeling that there was no chance of constructing anything useful in the near future, because the brain is an “extremely powerful bowl of spaghetti”: a completely chaotic structure, with no way to describe it, understand it or even simulate it.

Later I noticed the buzz about deep learning. You know: Google, Microsoft, … the bandwagon. After a short check I found out that deep learning is the old neural network model on steroids. So I intuitively decided to ignore this trend.

My mind was changed by two independent researchers working on a novel approach to AI inspired by progress in biology. The basic ideas are the following:

- Almost everything that the brain (the neocortex especially) learns can be described as sequences. When the brain sees eye-nose-eye-mouth, it may infer that it is looking at a face. The same is true for speech, music, the sense of touch, … E.g. when you hear a few tones of a song, you can quickly infer its name.

- These sequences are stored hierarchically. The eye from the example above is composed of eyelid-pupil-sclera-eyelash, and each of these may be further divided, down to the level of pixels coming from the retina. This organization is extremely efficient.

- A sequence is a noise-canceling and predicting tool. If you see only one eye in the example above because the other is hidden by some object (noise), you can still detect the face.

- Sequences can be played back. For example, the name of a fairy tale can be unwound into chapters, chapters into sentences, sentences into words, words into vowels and consonants, and further into muscle commands.

- It uses unsupervised learning. It just gets a constant stream of data from the sensors (eye, ear, touch, pain, taste, …), starts to detect patterns (sequences) in this data and builds a hierarchy of them. All on the fly.

- Similar concepts are bound together, and that provides the tooling for invariant object detection. E.g. a car seen from a distance of 5 meters is the same concept as a car seen from a distance of 100 meters.
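To make a few of these ideas concrete, here is a toy sketch in Python of hierarchical sequences, playback, and recognition with a hidden part. It is only an illustration under strong assumptions: the hierarchy is written by hand instead of being learned from a data stream, and the names `HIERARCHY`, `unfold`, `match_score`, `recognize` and the 0.7 threshold are all hypothetical. It is not the model of either researcher.

```python
# Toy hierarchy: each concept maps to the ordered sequence of its parts.
# Names that are not keys here are treated as raw inputs (leaves).
HIERARCHY = {
    "face": ["eye", "nose", "eye", "mouth"],
    "eye": ["eyelid", "pupil", "sclera", "eyelash"],
}


def unfold(concept):
    """Play a concept back as the flat sequence of its lowest-level parts."""
    parts = HIERARCHY.get(concept)
    if parts is None:
        return [concept]  # a leaf cannot be divided further
    flat = []
    for part in parts:
        flat.extend(unfold(part))
    return flat


def match_score(observed, stored):
    """Fraction of the stored sequence found, in order, in the observation."""
    position = 0
    hits = 0
    for element in stored:
        try:
            position = observed.index(element, position) + 1
            hits += 1
        except ValueError:
            pass  # element is missing or hidden; keep looking for the rest
    return hits / len(stored)


def recognize(observed, threshold=0.7):
    """Return the best-matching concept, or None if no score is high enough."""
    best = max(HIERARCHY, key=lambda name: match_score(observed, HIERARCHY[name]))
    return best if match_score(observed, HIERARCHY[best]) >= threshold else None


# One eye is hidden behind a hand (noise), yet the face is still detected.
print(recognize(["eye", "nose", "hand", "mouth"]))
# Playback: the face unwound down to the lowest level of this toy hierarchy.
print(unfold("face"))
```

The ordered matching in `match_score` is what gives the noise tolerance: three of the four stored parts are found in the right order, so the hidden eye does not prevent recognition.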

First I found Rebel Science by Louis Savain. This guy is … well … a bit eccentric. He claims his knowledge about the brain comes from the Bible: he found some hidden messages while studying it. For this reason and many others (he has strong opinions about IT, physics, … too) he is ridiculed by most of his blog visitors. But I read his posts, and the model he proposed seemed reasonable to me. Check Secrets of the Holy Grail as a starter. Unfortunately I was never fully able to follow Louis's thoughts, so I partially failed when I tried to construct a neural network based on his ideas. My text pattern detection tool had a success rate of about 50%.

In one post Louis referred to another guy, Jeff Hawkins. He uses basically a similar principle and describes it in the book On Intelligence. I read that book, and it is great in the chapters where he describes the generic principles of AI based on the hierarchical sequence model. Unfortunately the book contains no meaningful guide to building your own model. This changed with this document about Hierarchical Temporal Memory and especially Why Neurons Have Thousands of Synapses. Jeff owns a company called Numenta, which offers an open source tool called NuPIC. I guess they have some basic flaw in their models. Why? Because if it worked as it is supposed to, it would be the ultimate breakthrough.

So I decided to try to build my own implementation of the principles of these two gentlemen. If nothing else, I will learn more about the brain. I am the “learning-by-doing” kind of person, so this is the natural way for me :-) Like it or not.

I also decided that I will record my thoughts on this blog. Maybe it will also help other people on the way to true artificial intelligence.
